The technological singularity scenario anticipates an exponential improvement in technology, especially artificial intelligence, which would create superhuman intelligence and transform the world into a science fiction-like society. It is debated whether such a trend can currently be observed. A good introduction to the debate about whether or not the singularity is coming is the essay exchange between the well-known "singularist" Ray Kurzweil and Paul Allen (co-founder of Microsoft, now turned philanthropist):

- Kurzweil's The law of accelerating returns written in 2001.

- Paul Allen's reply The singularity isn't near in MIT Technology Review 2011.

- Kurzweil's reply: Don't underestimate the singularity in the same magazine.

An overview of the debate may be found in a recent report by Amnon H. Eden, The Singularity Controversy, Part I: Lessons Learned and Open Questions: Conclusions from the Battle on the Legitimacy of the Debate.

So is the singularity coming? Fundamentally, an AI explosion must, to some degree, be driven by the underlying computing technology, so we should be able to track the progress of computer technology and see whether the development is exponential. I will argue below that while there was still reason for optimism in 2011, the last five years have actually seen a deceleration in hardware technology, despite great improvements in consumer products like smartphones. I will look at the state of computing power/speed, storage, and network technology. Of these, only networking technology is still on an exponential path with much potential for improvement; the others are quickly approaching their physical limits.

Computational power

In terms of computational power, it is absolutely clear by now that Moore's law of semiconductor manufacturing is coming to an end. This is important because miniaturisation has been a main driver of the computing revolution. Although Moore's law was originally used as an argument for the coming singularity, today it is typically used as an argument against reaching it, because we are approaching the physical limits of current semiconductor technology. In order for the singularity to happen, we need a completely new type of underlying computing technology. A few years ago, only ardent pessimists would come out as non-believers in the perpetual improvement of computer processors, although many industry insiders would readily admit that processor manufacturing costs were not going down as expected. The charade finally ended when Intel, the leading semiconductor company, was forced to publicly announce that it was delaying its new 10 nanometer manufacturing process to late 2017 and changing its product plans. My interpretation of this decision is that Intel is facing unexpectedly high production costs and needs to amortize them over one more product cycle in order to generate enough profit. According to information given at Intel's investor meeting in 2015, the cost per square mm of chip area is growing exponentially (slide 6), and the manufacturing efficiency of the 14 nm process has still, after 2 years, not caught up with the old 22 nm process and may never do so. The numbers in the presentation from their chief financial officer corroborate this interpretation: in slide 30, what looks like Intel's product manufacturing costs are plotted from 2008 to 2016. They decreased steadily from 2008 to 2013 (confirming the trend of a coming technology singularity) but have been flat over the last few years.

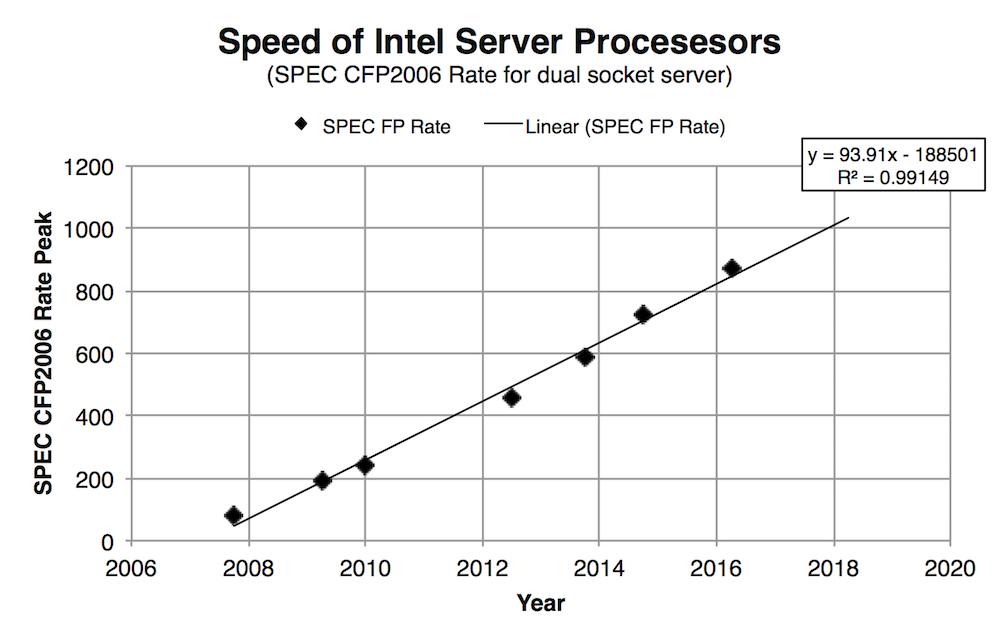

The result is evident when charting actual processor speed metrics over time. Below, I have plotted the widely used SPEC 2006 measurement for an industry standard server using each new generation of server processors coming out of Intel during the last ten years (processor models "Xeon X5460-X5660" and "Xeon E5-2660v2-v4"). These were priced approximately the same, so the speed also represents compute speed per dollar.

The recent development in processor technology is not exponential, as predicted by the singularity hypothesis, but rather very much linear. This implies that if the trend continues, even though the speed has increased tenfold during the last ten years, it will not improve 10x further during the next ten years (to a SPEC FP Rate of 10,000 per server by 2028). Instead, we are likely to have something like 2000 per server, which is still impressive, but not a "Star Trek" scenario.
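To make the gap between the two trajectories concrete, here is a minimal sketch in Python. The starting and current scores (100 and 1,000) are round illustrative numbers chosen only to match the tenfold increase described above; they are not values read off the chart, and the function names are hypothetical.

```python
# Illustrative sketch: round numbers chosen to match a tenfold increase
# over ten years, as described in the text. Not actual chart readings.

def extrapolate_linear(start, now, years_elapsed, years_ahead):
    """Continue the average absolute gain per year."""
    slope = (now - start) / years_elapsed
    return now + slope * years_ahead


def extrapolate_exponential(start, now, years_elapsed, years_ahead):
    """Continue the average relative gain per year."""
    annual_factor = (now / start) ** (1.0 / years_elapsed)
    return now * annual_factor ** years_ahead


START_SCORE = 100.0     # assumed score ten years ago
CURRENT_SCORE = 1000.0  # assumed score today (10x the start)

linear_forecast = extrapolate_linear(START_SCORE, CURRENT_SCORE, 10, 10)
exponential_forecast = extrapolate_exponential(START_SCORE, CURRENT_SCORE, 10, 10)

print(f"linear:      {linear_forecast:.0f}")
print(f"exponential: {exponential_forecast:.0f}")
```

Under these assumptions, the linear continuation lands just under 2,000, while the exponential one lands at about 10,000.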

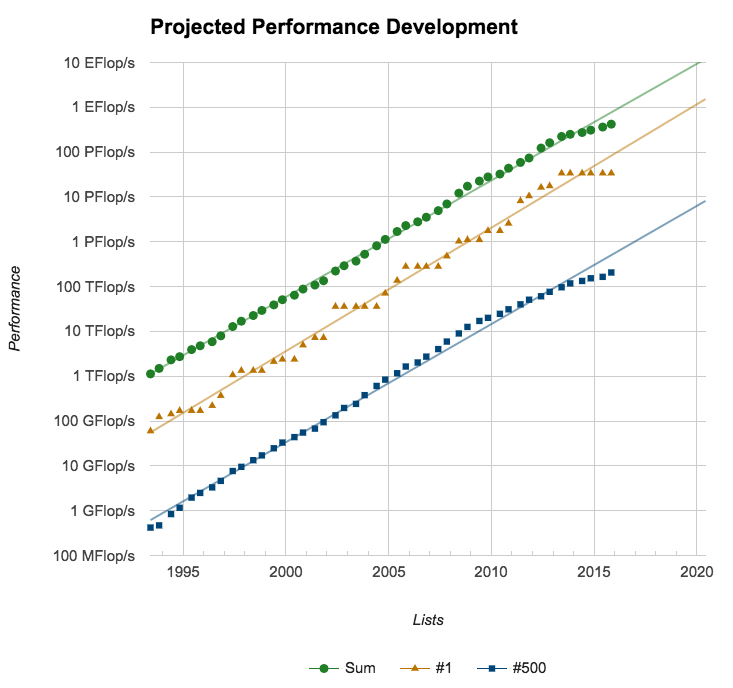

Another observation comes from the cutting edge of computing, the TOP500 list of the world's most powerful integrated computer systems, known as supercomputers. The field has a history of delivering an exponential increase in computing power over time, but in recent years the pace of improvement has slowed down rather than accelerated. In the statistics section of the TOP500 site, there is a performance development follow-up which shows the "Exponential growth of supercomputing power as recorded by the TOP500 list":

The top line "Sum" represents the aggregated computing power of all systems on the TOP500 list, "#1" is the fastest system (which tends to make up a significant portion of the total), and "#500" is the entry level to get on the list. There seems to be a kink in the line around 2013, when the development started to slow down. It will be interesting to monitor the TOP500 lists coming out in 2016: will they be able to close the gap to the historical trend and continue on the path to the singularity?

I also note that although the underlying processor performance has only improved 10x or so in recent years (according to the chart above), the supercomputing community has been able to grow its capacity by 100x, one order of magnitude more. How is this possible, given that Intel's server processors are the technology actually being used in many of these installations? The explanation is mainly that they have been able to build bigger systems using more processors, which consume more power, presumably as a result of bigger budgets. One can debate whether this should be seen as a positive technological development or not. In a way, it is a good sign that society has grown so prosperous that it can allocate more resources to science and supercomputing, and thus this development would feed the positive loop leading to the singularity. On the other hand, it shows that technology per se is not delivering what was hoped for, and that eventually the dream of exascale computing and full-brain simulation may prove to be a mirage upheld by government stimulus programmes.

Storage

There is an observation analogous to Moore's law for hard drives, called Kryder's law after Mark Kryder, who was then head of research and development at Seagate. It posits that the storage density of information, usually measured in gigabits or terabits per square inch of storage media, grows exponentially over time. The growth rate attributed to Kryder is usually an increase of 40% per year, a prediction that was made in 2009 and reflected the improvements of hard drives during the 90s and 00s. I find it interesting to follow up on this prediction, so below I have plotted the maximum size of 3.5" hard disk drives on the market over the last ten years, starting with the first 1 TB drive in early 2007:

The curve fit is much closer to an exponential curve than a linear one, and the compound annual growth rate has been approximately 29%. We can see that the hard drive industry has not been able to fully deliver on Kryder's prediction, partly because of the flooding disasters in Thailand in 2011, where many hard drive manufacturing plants were located, but the main conclusion is that hard drive development is still exponential, albeit at a slower rate.
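As a rough illustration of how much the difference between 40% and 29% per year matters when compounded, the sketch below grows a 1 TB drive at both rates. The ten-year horizon is a round assumption, and the helper names are hypothetical; the output is arithmetic on the rates quoted above, not data from the plot.

```python
# Sketch: compound a 1 TB starting capacity at the two annual growth
# rates discussed in the text. The ten-year horizon is a round assumption.

def capacity_after(start_tb, annual_growth, years):
    """Capacity after compounding annual_growth for the given years."""
    return start_tb * (1.0 + annual_growth) ** years


def cagr(start, end, years):
    """Compound annual growth rate implied by a start and end value."""
    return (end / start) ** (1.0 / years) - 1.0


START_TB = 1.0  # first 1 TB drive, early 2007
YEARS = 10      # assumed horizon

kryder_tb = capacity_after(START_TB, 0.40, YEARS)    # Kryder's 40% per year
observed_tb = capacity_after(START_TB, 0.29, YEARS)  # fitted 29% per year

print(f"at 40%/year: {kryder_tb:.1f} TB")
print(f"at 29%/year: {observed_tb:.1f} TB")
print(f"rate recovered from endpoints: {cagr(START_TB, observed_tb, YEARS):.1%}")
```

Over ten years, the 40% rate would give roughly 29 TB, while 29% gives roughly 13 TB, so the seemingly modest shortfall in the annual rate more than halves the end result.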

For the future, the industry roadmap by IDEMA now predicts 25% growth in density per year in the coming decade, arriving at 10 Tb/sq. inch by 2025, first using heat-assisted magnetic recording with a laser ("HAMR") and then so-called "Heated-Dot Magnetic Recording" technology, which combines HAMR and bit-patterned media. With such technology, hard drives 10 times larger than today's would be possible, i.e. up to 100 TB.

The problem with storage as I see it, though, is not so much that the exponential growth is fading (from 40% down to 25% per year), but rather that the underlying technology is rapidly reaching its inherent limits. The ultimate limit is believed to be the superparamagnetic limit of a single magnetic particle, which is estimated to be between 10 and 100 terabit/sq. in., so that 100 TB hard drive might actually be the best that can be achieved. It is surely impressive, but I doubt that it is a sufficient catalyst for a singular leap. Just like silicon semiconductors, hard drives may reach their physical limit in the 2020s, perhaps before the singularity is reached.
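To get a feeling for how little headroom this leaves, the following sketch estimates how many years of 25% annual growth fit between the roadmap density of 10 Tb/sq. in. and the two ends of the estimated limit. It assumes the roadmap rate simply continues unchanged, which is a simplification, and the function name is hypothetical.

```python
import math

# Sketch: years of compound growth needed to go from a current density
# to a limiting density. Assumes the growth rate stays constant.

def years_to_reach(current, limit, annual_growth):
    """Years until `current` grows to `limit` at `annual_growth` per year."""
    if limit <= current:
        return 0.0
    return math.log(limit / current) / math.log(1.0 + annual_growth)


ROADMAP_DENSITY = 10.0  # Tb/sq. in., roadmap figure for 2025
GROWTH = 0.25           # roadmap growth rate per year

for limit in (10.0, 100.0):  # low and high estimates of the limit
    years = years_to_reach(ROADMAP_DENSITY, limit, GROWTH)
    print(f"limit {limit:5.0f} Tb/sq. in.: {years:.1f} more years")
```

If the low estimate holds, the roadmap ends at the limit; even the high estimate buys only about one more decade at the same pace.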

Networking

The usual observation for networking is that the amount of traffic across the internet doubles at some regular interval. For example, Cisco predicts that global IP traffic on the internet will increase by 23% every year from 2014 to 2019 (i.e. a doubling roughly every 3.3 years). There is also Nielsen's law of internet bandwidth, which predicts that for consumer internet access:

a high-end user's connection speed grows by 50% per year.

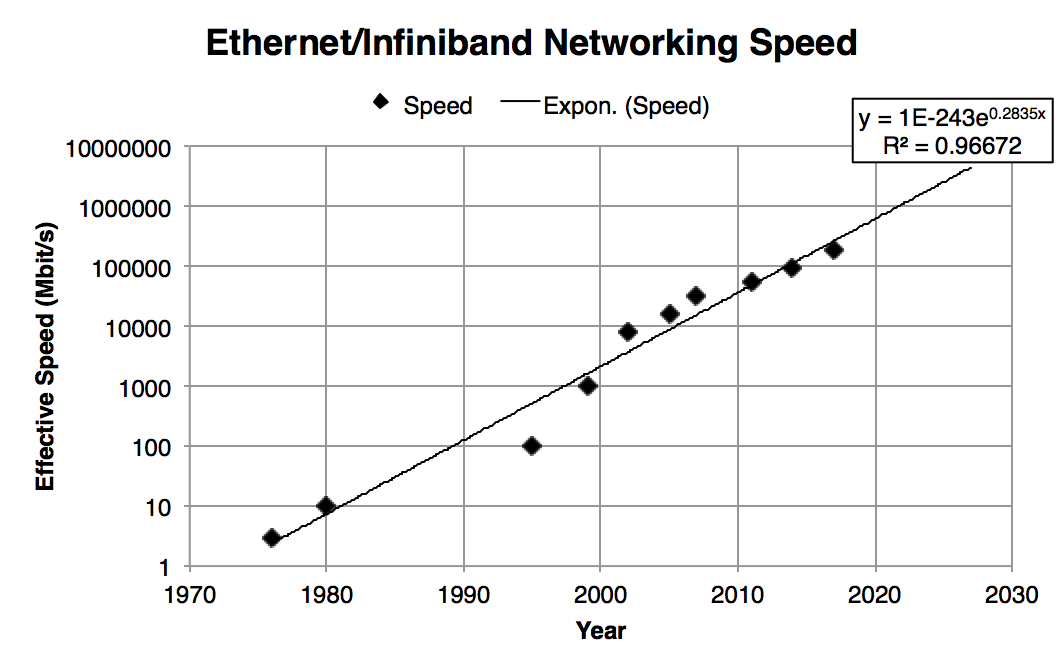

But the growth of the internet is to some extent driven by connecting more people to it and by increasing investments, and not only by advances in communication technology. In terms of communication speed, I have looked at the fastest commercially available Ethernet or InfiniBand network connection (this is for a single port connection, so I assume that any aggregating backplane is capable of many times this rate). Historically, Ethernet networking became available in the 70s with 1-3 megabit/s speeds, and today it is possible to get 100 gigabit/s links for the most demanding applications. When plotted over time, the development of networking speed actually looks very promising:

Clearly, networking speed also shows an exponential improvement over several decades. From the graph, I estimate the annualized growth rate to be about 33%/year, which is higher than the growth rate for computing and storage, but lower than the rate in Nielsen's law. We could thus see a development where networking evolves so fast compared to storage that it becomes inconsequential where the data is stored, because it will be trivial to transfer almost any amount of data to where it is needed.
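Annual growth rates are easier to compare when converted to doubling times. The sketch below does this for the rates quoted in this article; the helper name is hypothetical, and the conversion assumes a constant rate compounded once per year.

```python
import math

# Sketch: convert a constant annual growth rate into a doubling time.

def doubling_time_years(annual_growth):
    """Years needed to double at a constant annual growth rate."""
    return math.log(2.0) / math.log(1.0 + annual_growth)


# Rates quoted in the article, as fractions per year.
rates = {
    "Nielsen's law (consumer bandwidth)": 0.50,
    "Kryder's law (storage density)": 0.40,
    "Network port speed (my estimate)": 0.33,
    "Hard drive capacity (fitted)": 0.29,
}

for label, rate in rates.items():
    print(f"{label}: doubles every {doubling_time_years(rate):.1f} years")
```

At 33% per year, port speed doubles in a bit under two and a half years, compared with a bit under two years for Nielsen's 50%.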

The question, just as with storage technology, is how much improvement is left before some kind of physical limit is hit. There are currently plans for commercial 200 and 400 gigabit/s networking, which will arrive in a few years. 1 terabit/s has been demonstrated, but there are no clear plans for how to deliver it commercially and cost-efficiently. Only the next decade's top-of-the-line supercomputers have terabit/s networking on the roadmap at this moment. The difference with networking, compared to computing and storage, however, is that the maximum capacity of fiber optic communication technology has already been demonstrated to be over 1 petabit/s. That means that there is no inherent physical limit imminently approaching in the same way.

Summary

It appears that the rate of progress of the key components underlying modern computing technology has actually decreased over the past decade. Most importantly, computer processors, while still improving, have been on a linear trajectory for some time, indicating that Moore's law is coming to an end. This trend is the opposite of what should be happening if we are approaching a singularity event in computer science. In fact, only networking speed is still growing exponentially at an unchanged rate and with lots of room to improve. If one looks at the future potential gains of our current technologies, it looks like processor speed, storage capacity, and networking speed may have a potential gain of 10 to 100x to realize in the coming decade. In my opinion, that will not be sufficient to catalyze a singularity event, such as artificial general intelligence based on full brain-like simulations, in the near term.

My hypothesis is instead that continued economic growth, as we experience it now, actually presupposes a technological singularity in the first place. After all, the standard expectation is that the economy will grow by a certain per cent every year. Over a long time, that is the same as envisioning an exponential increase in economic activity, which is just another way of saying that the singularity is the expected status quo of our current society. It seems to me that the recent improvements in productivity, energy efficiency, automation, and machine learning are just being absorbed into the general economy to keep it afloat. Despite all these developments, the global economy has barely kept up and is still growing at a slower rate than during the 1990s and 2000s. To me, that indicates that the productivity improvements of the singularity are slowly being tapped off to substitute for other slowing factors, such as demographic effects.

Another factor that puts restraints on the approaching singularity is that it requires exponential growth in energy availability. After all, the machines need energy to do their thing, just as humans do. But currently, it looks unlikely that energy availability will grow exponentially during the next 100 years or so, given the transformation of the energy systems that is necessary to limit carbon dioxide emissions. It would be another thing if, let us imagine, the first wave of commercially available fusion reactors were about to be rolled out over the next 10 years. Instead, we are faced with the problem of retooling a big part of our energy infrastructure.

So in conclusion, extrapolating the trends seen in computer technology over the last decade, the picture that emerges is that the singularity is not near, and that we may, in fact, never approach it unless fundamentally new concepts of computing and storing information are discovered. Of course, that is what every futurist hopes for and speculates about.